Although every new information technology carries risk, AI is perhaps among those that most increase the magnitude of that risk, both negative (given its potential impact at the organisational and social level) and positive (given the great opportunities it offers, for example in decision support).

Traditional IT risks also evolve more rapidly in AI than in most other areas, giving rise to risks such as:

- Legal, when intelligent systems do not comply with current regulations.

- Ethical, when no thought is given to how intelligent systems are used.

- Technical, when the software or hardware infrastructure does not ensure the robustness of intelligent systems.

On the legal side, the most notable instrument is the European Union's AI Act. At the national level in Spain, there is the ‘Anteproyecto de Ley para el Buen Uso y la Gobernanza de la Inteligencia Artificial’ (Draft Bill for the Good Use and Governance of Artificial Intelligence), which develops the penalty and governance regime provided for in the AI Act and adapts it to Spanish legislation.

Regarding ethical aspects, the technical report ISO/IEC TR 24368, ‘Information technology — Artificial intelligence — Overview of ethical and societal concerns’, is highly relevant. It points out that AI ethics is a field within applied ethics, brings together various ethical frameworks, and uses them to highlight the new ethical and societal concerns that arise with AI. It also provides examples of practices and considerations for creating and using ethical and socially acceptable AI. Also relevant are the two initiatives on this topic led at CEN/CENELEC by Juan Pablo Peñarrubia Carrión, Vice-President of the Council of Professional Associations of Computer Engineering in Spain (CCII): ‘Guidelines on tools for handling ethical issues in AI system life cycle’ and ‘Guidance for upskilling organisations on AI ethics and social concerns’.

As for the technical side, there are international standards for evaluating, ensuring and improving the functionality and quality of intelligent systems. Among these, we highlight the standards covering good software processes for developing AI systems (ISO/IEC 5338), a quality model for AI software (ISO/IEC 25059) and data quality (ISO/IEC 5259).

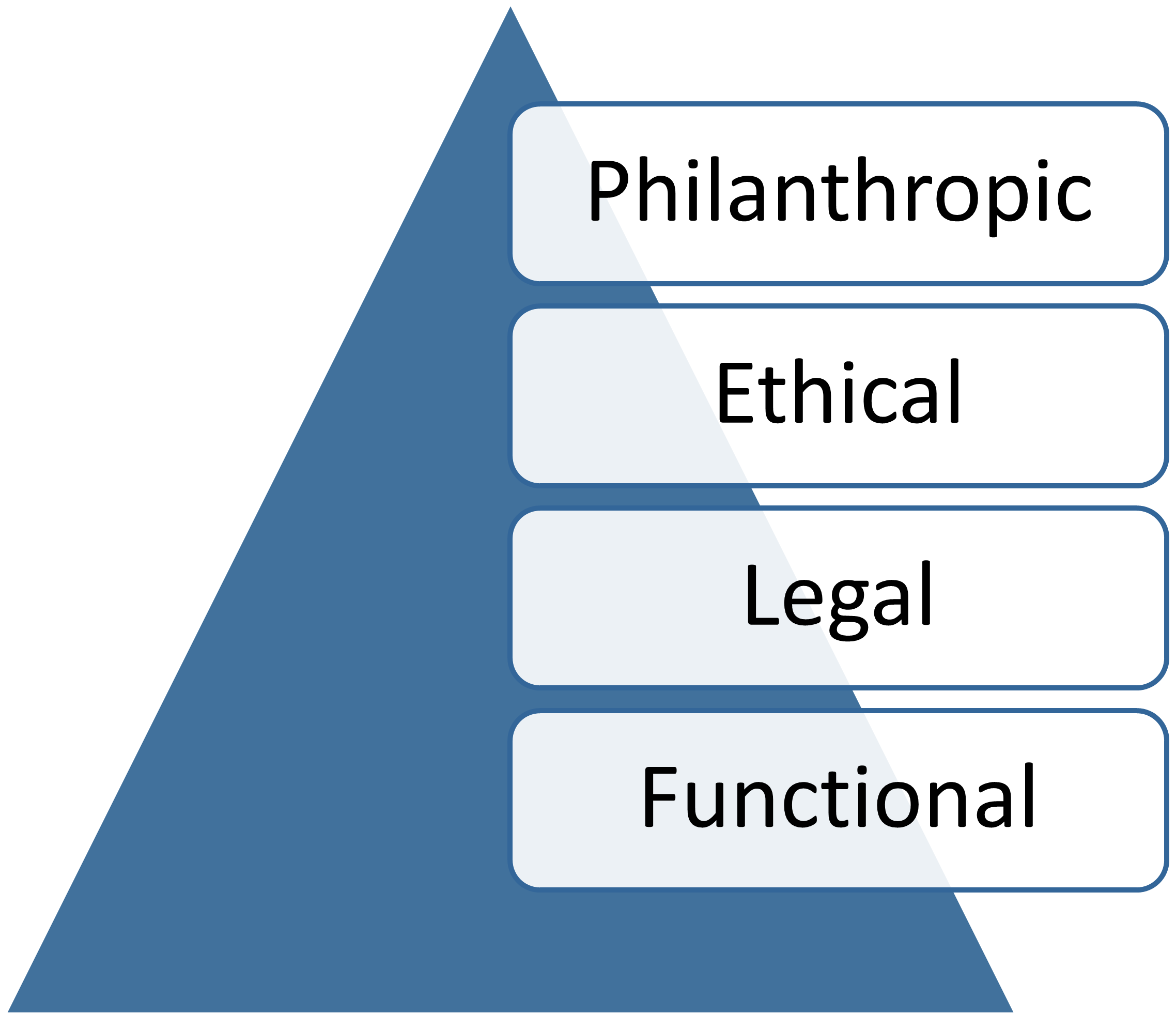

In the ‘Social Pyramid of AI’, the authors identify four components of AI social responsibility, starting from the basic notion that the functional suitability of AI underpins everything else. The next component, the legal one, requires AI systems to act in a manner consistent with government and legal expectations, meeting at least the minimum legal requirements. At the next level, ethical responsibilities entail the obligation to do what is right, fair and equitable, and to avoid or mitigate negative impacts on stakeholders. Finally, under philanthropic responsibilities, AI is expected to be a ‘good citizen’, helping to address social challenges such as cancer and climate change.

As we can see, the entire pyramid rests on intelligent systems being functionally adequate and meeting minimum quality requirements. Otherwise, complying with legal and ethical requirements will be of little use.

We are convinced that with functional, high-quality AI, together with appropriate management and governance systems, intelligent systems will be able to fulfil their philanthropic function, which is ultimately the most important thing: that AI truly serves to improve the quality of life of people and their organisations.